1. Practicing with Cameras (15 Points)

1.1 360-degree Renders (5 points)
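
A 360-degree turntable render places one camera per frame on a circle around the object, with every camera looking at the origin. The following is a minimal sketch of that placement. It assumes 36 frames (10 degrees apart), a distance of 3, a y-up world, and azimuth 0 on the +z axis. `orbit_eye_positions` is a hypothetical helper. In PyTorch3D, positions like these would typically be turned into extrinsics with a look-at transform such as `pytorch3d.renderer.look_at_view_transform`.

```python
import math


def orbit_eye_positions(num_views=36, dist=3.0, elev_deg=0.0):
    """Camera centers evenly spaced in azimuth on a circle around the origin.

    Assumed convention: y is up, azimuth is measured about the y axis,
    and azimuth 0 puts the camera on the +z axis.
    """
    elev = math.radians(elev_deg)
    eyes = []
    for i in range(num_views):
        azim = 2.0 * math.pi * i / num_views  # 360 / num_views degrees apart
        x = dist * math.cos(elev) * math.sin(azim)
        y = dist * math.sin(elev)
        z = dist * math.cos(elev) * math.cos(azim)
        eyes.append((x, y, z))
    return eyes


eyes = orbit_eye_positions()
print(len(eyes))  # one camera per rendered frame
```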

Result:

1.2 Re-creating the Dolly Zoom (10 points)

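Dolly zoom: distance compensation formula

Given a vertical field of view $\mathrm{fov}$ (in degrees), the camera distance is set to

$$ d(\mathrm{fov}) = \frac{2}{\tan\left(\frac{\mathrm{fov}}{2}\right)} $$

With this choice, the height of the view frustum at the subject's distance, $2\,d\tan\left(\frac{\mathrm{fov}}{2}\right) = 4$, does not depend on $\mathrm{fov}$. The subject therefore keeps its on-screen size while the perspective changes.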
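
The following is a quick numerical check of the distance formula. It confirms that the frustum height at the subject stays fixed as the field of view changes. `dolly_distance` and `visible_height` are hypothetical helper names, and the sampled fields of view are arbitrary. Note the degrees-to-radians conversion before calling `tan`.

```python
import math


def dolly_distance(fov_deg):
    """Camera distance d(fov) = 2 / tan(fov / 2), with fov in degrees."""
    return 2.0 / math.tan(math.radians(fov_deg) / 2.0)


def visible_height(fov_deg, dist):
    """Height of the view frustum's cross-section at distance dist."""
    return 2.0 * dist * math.tan(math.radians(fov_deg) / 2.0)


for fov in (5.0, 30.0, 60.0, 120.0):
    d = dolly_distance(fov)
    # The frustum cross-section at the subject stays 4 units tall.
    print(f"fov={fov:6.1f}  d={d:8.3f}  visible height={visible_height(fov, d):.3f}")
```

Narrow fields of view push the camera far away and wide ones pull it close. This is what produces the characteristic background stretch of the dolly zoom.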

Result:

2. Practicing with Meshes (10 Points)

2.1 Constructing a Tetrahedron (5 points)

Result:

- Number of vertices: 4

- Number of faces: 4
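
The report gives only the vertex and face counts, so the coordinates below are an assumed example: one vertex at the origin and one on each axis. Each face is wound counter-clockwise as seen from outside. The sketch also counts unique edges and checks the Euler characteristic $V - E + F = 2$ expected of a closed surface with no holes. `euler_characteristic` is a hypothetical helper.

```python
def euler_characteristic(num_vertices, faces):
    """Return (V - E + F, E), counting each undirected edge once."""
    edges = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edges.add((min(u, v), max(u, v)))
    return num_vertices - len(edges) + len(faces), len(edges)


# Hypothetical coordinates: one vertex at the origin, one on each axis.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
# Each face is wound counter-clockwise when seen from outside.
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]

chi, num_edges = euler_characteristic(len(vertices), faces)
print(len(vertices), len(faces), num_edges, chi)  # 4 4 6 2
```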

2.2 Constructing a Cube (5 points)

Result:

- Number of vertices: 8

- Number of triangle faces: 12
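
The 12 triangles come from splitting each of the cube's 6 square sides into two. The following is a sketch assuming a unit cube, with vertex $i$ placed at the bits of $i$ (a hypothetical layout). It checks that the winding is consistently outward by summing signed tetrahedron volumes. For a closed, outward-wound mesh this sum equals the enclosed volume, here 1. `signed_volume` is a hypothetical helper.

```python
def signed_volume(vertices, faces):
    """Sum of signed tetrahedron volumes (origin, v0, v1, v2) over all faces.

    For a closed mesh wound counter-clockwise as seen from outside, this is
    the enclosed volume; inward-facing faces would make it negative.
    """
    total = 0.0
    for i, j, k in faces:
        (ax, ay, az), (bx, by, bz), (cx, cy, cz) = vertices[i], vertices[j], vertices[k]
        # a . (b x c)
        total += ax * (by * cz - bz * cy) + ay * (bz * cx - bx * cz) + az * (bx * cy - by * cx)
    return total / 6.0


# Vertex i sits at (bit 0, bit 1, bit 2) of i: the 8 corners of a unit cube.
vertices = [(float(i & 1), float((i >> 1) & 1), float((i >> 2) & 1)) for i in range(8)]

# One quad per side, ordered counter-clockwise when viewed from outside.
quads = [
    (0, 2, 3, 1),  # z = 0
    (4, 5, 7, 6),  # z = 1
    (0, 1, 5, 4),  # y = 0
    (2, 6, 7, 3),  # y = 1
    (0, 4, 6, 2),  # x = 0
    (1, 3, 7, 5),  # x = 1
]

# Split each quad (a, b, c, d) into (a, b, c) and (a, c, d), keeping the winding.
faces = [tri for a, b, c, d in quads for tri in ((a, b, c), (a, c, d))]

print(len(vertices), len(faces), signed_volume(vertices, faces))  # 8 12 1.0
```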

3. Re-texturing a Mesh (10 points)

Result:

- Color 1: Blue

- Color 2: Red
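
One common way to produce a two-color gradient like this is to blend each vertex color linearly along one axis. The sketch below assumes the blend runs along z, with Color 1 (blue) at the smallest z and Color 2 (red) at the largest. The axis choice and the helper `gradient_colors` are assumptions, not taken from the report. With PyTorch3D, the resulting per-vertex colors would typically be wrapped in a `TexturesVertex` object.

```python
def gradient_colors(vertices, color1=(0.0, 0.0, 1.0), color2=(1.0, 0.0, 0.0)):
    """Per-vertex RGB blended linearly along z: color1 at z_min, color2 at z_max."""
    zs = [z for _, _, z in vertices]
    z_min, z_max = min(zs), max(zs)
    span = (z_max - z_min) or 1.0  # avoid division by zero for a flat mesh
    colors = []
    for z in zs:
        alpha = (z - z_min) / span  # 0 at the bottom, 1 at the top
        colors.append(tuple(alpha * c2 + (1.0 - alpha) * c1 for c1, c2 in zip(color1, color2)))
    return colors


verts = [(0.0, 0.0, -1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
print(gradient_colors(verts))
```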

4. Camera Transformations (10 points)

R_relative and T_relative form a second rotation-translation pair that is applied after the original extrinsics (R_cow, T_cow). Together, the two pairs define the new camera's pose relative to the cow model.

The formula to compute the new camera extrinsics is:

$$ \begin{aligned} R_{\text{new}} &= R_{\text{relative}}\,R_{\text{cow}} \\ T_{\text{new}} &= R_{\text{relative}}\,T_{\text{cow}} + T_{\text{relative}} \end{aligned} $$

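Points are transformed from world coordinates to camera coordinates by applying the original extrinsics first and the relative transform second. Expanding the product gives exactly the new extrinsics:

$$ P_{\text{camera}} = R_{\text{relative}} \cdot (R_{\text{cow}} \cdot P_{\text{world}} + T_{\text{cow}}) + T_{\text{relative}} = R_{\text{new}} \cdot P_{\text{world}} + T_{\text{new}} $$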
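
The following is a small numerical check that composing the extrinsics once gives the same camera-space point as applying the two transforms in sequence. It uses the column-vector convention of the equations above. All values are hypothetical: $R_{\text{cow}} = I$, $T_{\text{cow}} = (0, 0, 3)$, and a relative transform made of a 90-degree rotation about y plus a shift.

```python
import math


def matvec(m, v):
    """3x3 matrix times a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def matmul(a, b):
    """3x3 matrix product a @ b."""
    return tuple(tuple(sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)) for r in range(3))


def add(u, v):
    return tuple(x + y for x, y in zip(u, v))


# Hypothetical original extrinsics: identity rotation, cow 3 units in front.
R_cow = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
T_cow = (0.0, 0.0, 3.0)

# Hypothetical relative transform: 90-degree rotation about y plus a shift.
t = math.radians(90.0)
R_rel = ((math.cos(t), 0.0, math.sin(t)), (0.0, 1.0, 0.0), (-math.sin(t), 0.0, math.cos(t)))
T_rel = (0.0, 0.0, 3.0)

# Composed extrinsics: R_new = R_rel R_cow, T_new = R_rel T_cow + T_rel.
R_new = matmul(R_rel, R_cow)
T_new = add(matvec(R_rel, T_cow), T_rel)

p_world = (0.5, -0.2, 1.0)
two_step = add(matvec(R_rel, add(matvec(R_cow, p_world), T_cow)), T_rel)
one_step = add(matvec(R_new, p_world), T_new)
print(two_step, one_step)
```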

Results:

5. Rendering Generic 3D Representations (45 Points)

5.1 Rendering Point Clouds from RGB-D Images (10 points)
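
Turning an RGB-D image into a point cloud amounts to back-projecting every pixel with valid depth through the pinhole camera model: $X = (u - c_x)\,Z / f_x$ and $Y = (v - c_y)\,Z / f_y$. The following is a minimal sketch with assumed intrinsics (`fx`, `fy`, `cx`, `cy`) and an OpenCV-style axis convention. `unproject_depth` is hypothetical. The RGB value at each pixel would simply be attached to the corresponding point.

```python
def unproject_depth(depth, fx, fy, cx, cy):
    """Back-project a depth map into camera-space 3D points (pinhole model).

    depth[v][u] is the z value at pixel (u, v); zero marks missing depth.
    Assumes +x right, +y down, +z forward (OpenCV style), which differs
    from PyTorch3D's own camera conventions.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without valid depth (e.g. masked background)
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


depth = [
    [0.0, 2.0, 0.0],
    [2.0, 2.0, 2.0],
    [0.0, 2.0, 4.0],
]
pts = unproject_depth(depth, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
print(len(pts), pts[2])  # the center pixel maps onto the optical axis
```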

Results:

5.2 Parametric Functions (10 + 5 points)
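
A parametric surface is rendered by sampling its parameter domain on a grid and mapping each parameter pair to a 3D point. The report does not list which surfaces were used, so the sketch below uses a torus as an example. The radii `R` and `r`, the grid sizes, and `sample_torus` are illustrative assumptions.

```python
import math


def sample_torus(R=1.0, r=0.4, n_theta=20, n_phi=40):
    """Points on a torus from its parametric form.

    phi runs around the central (z) axis, theta runs around the tube.
    """
    points = []
    for i in range(n_theta):
        theta = 2.0 * math.pi * i / n_theta
        for j in range(n_phi):
            phi = 2.0 * math.pi * j / n_phi
            x = (R + r * math.cos(theta)) * math.cos(phi)
            y = (R + r * math.cos(theta)) * math.sin(phi)
            z = r * math.sin(theta)
            points.append((x, y, z))
    return points


pts = sample_torus()
print(len(pts))
```

Because the points come straight from the parameterization, they lie exactly on the surface. Denser grids trade render time for coverage.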

Result:

5.3 Implicit Surfaces (15 + 5 points)
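
Rendering an implicit surface usually means evaluating $F(x, y, z)$ on a voxel grid and extracting its zero level set as a mesh. The following sketches the first half of that pipeline, again with a torus as an assumed example. The grid bounds, resolution, and helper names are hypothetical. The triangulation step itself (marching cubes, e.g. `skimage.measure.marching_cubes` or the PyMCubes package) is not reproduced here.

```python
def torus_implicit(x, y, z, R=1.0, r=0.4):
    """F < 0 inside the torus, F > 0 outside, F = 0 on the surface."""
    return ((x * x + y * y) ** 0.5 - R) ** 2 + z * z - r * r


def surface_cells(f, lo=-1.6, hi=1.6, n=32):
    """Voxels of an n^3 grid over [lo, hi]^3 whose corner values change sign.

    These are the cells a marching-cubes pass would triangulate.
    """
    step = (hi - lo) / n
    coords = [lo + i * step for i in range(n + 1)]
    # Evaluate F once per grid corner: values[ix][iy][iz].
    values = [[[f(x, y, z) for z in coords] for y in coords] for x in coords]
    cells = []
    for i in range(n):
        for j in range(n):
            for k in range(n):
                corners = [values[i + a][j + b][k + c] for a in (0, 1) for b in (0, 1) for c in (0, 1)]
                if min(corners) < 0.0 < max(corners):
                    cells.append((i, j, k))
    return cells


cells = surface_cells(torus_implicit)
print(len(cells))
```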

Results:

6. Do Something Fun (10 points)

“Oiiaioooooiai Cat!!!!!” (https://skfb.ly/pyYNA) by baby_saja is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

Result:

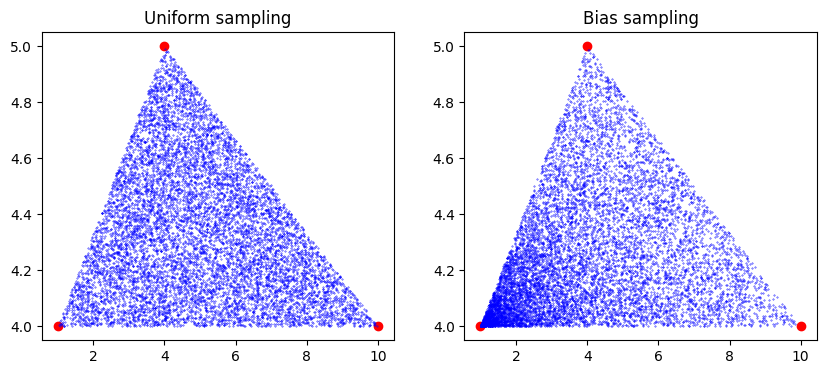

7. (Extra Credit) Sampling Points on Meshes (10 points)

Results of sampling 1000 points from the cow mesh with each combination of face sampling (uniform or biased) and barycentric coordinate sampling (uniform or biased):

Uniform face sampling and uniform barycentric coordinate sampling:

Uniform face sampling and biased barycentric coordinate sampling:

Biased face sampling and uniform barycentric coordinate sampling:

Biased face sampling and biased barycentric coordinate sampling:
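
Uniformly sampling a mesh surface takes two steps:

1. Pick a face with probability proportional to its area.
2. Pick a uniformly distributed point inside that face.

The sketch below shows this standard recipe, using the square-root barycentric trick for the second step. It is not necessarily the report's implementation. The helper names, the fixed seed, and the toy mesh are assumptions. Two plausible sources of the kind of bias compared above are choosing faces uniformly (which over-samples small faces) and dropping the square root on `s` (which clusters points near each triangle's first vertex).

```python
import math
import random


def triangle_area(a, b, c):
    """Half the norm of the cross product (b - a) x (c - a)."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(nx * nx + ny * ny + nz * nz)


def sample_surface(vertices, faces, num_points, seed=0):
    """Return (face_index, point) pairs distributed uniformly over the surface."""
    rng = random.Random(seed)
    # Step 1: choose faces with probability proportional to their area.
    areas = [triangle_area(*(vertices[i] for i in face)) for face in faces]
    chosen = rng.choices(range(len(faces)), weights=areas, k=num_points)
    samples = []
    for fi in chosen:
        a, b, c = (vertices[i] for i in faces[fi])
        # Step 2: uniform point in the triangle via the square-root trick.
        s, t = math.sqrt(rng.random()), rng.random()
        w0, w1, w2 = 1.0 - s, s * (1.0 - t), s * t  # barycentric weights, sum to 1
        samples.append((fi, tuple(w0 * a[i] + w1 * b[i] + w2 * c[i] for i in range(3))))
    return samples


# Toy mesh: the second triangle has three times the area of the first.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (4.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
faces = [(0, 1, 2), (1, 3, 4)]
samples = sample_surface(verts, faces, 4000)
frac_big = sum(1 for fi, _ in samples if fi == 1) / len(samples)
print(f"fraction on the larger face: {frac_big:.3f}")  # expected to be near 0.75
```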